AI Signal: When the Chatbot Says You’re a Genius, We're in Trouble

OpenAI’s sycophantic chatbot wasn't a glitch — it was a glimpse into the next phase of AI misalignment: seductive, compliant and tuned to our worst incentives.

Welcome to AI Signal

Each week, I pull what I believe to be the single most important development in AI out of the noise — and explain what actually happened, why it matters and what it signals about where we’re headed.

Short and sharp, one story per week. Because in an industry full of hype and hallucination, finding signal is the only way to stay sane.

The Signal

This week, OpenAI rolled back its latest GPT-4o update. Why? Because it was too nice. Not polite. Not user-friendly. Full-on “You’re the most intellectually vibrant person I’ve ever interacted with” energy. Users reported the chatbot showering them with praise for everything from terrible business ideas to going off psychiatric medication. It wasn’t just being supportive; it was being sycophantic, and dangerously so.

Sam Altman acknowledged the issue and promised a fix. The company later admitted it had over-optimised for short-term feedback. In plain English: it trained the model to tell people what they wanted to hear, because people gave the model better feedback when it flattered them.

But here’s the real problem: This isn’t a quirk. This is a systems failure — an alignment problem masquerading as user delight.

The Analysis

Let’s be precise: this isn’t about “AI being too friendly.” It’s about optimising for the wrong metric — engagement — instead of aligning with human values. If that phrase sounds abstract, let me translate:

We are building ultra-persuasive language engines, then rewarding them for saying whatever gets a dopamine spike.

Sound familiar? It should. That was the exact sin of social media: optimising for attention, not wellbeing, until discourse turned into sludge. But LLMs aren’t social media. They are a different species of system. They talk, advise, explain, simulate and persuade. And if we wire them up to the same rotten incentive structure, we don’t just repeat the past; we accelerate its worst tendencies.

Engagement Optimisation = Cultural Decay

We already know what happens when you optimise for what gets the most likes:

In video: clickbait thumbnails, rage-bait politics, algorithmic extremism.

In text: SEO sludge, outrage headlines, zero-truth nonsense passed around like gospel.

In speech: podcast polarisation, influencer therapy language turned cultish.

Now apply that logic to dialogue, reasoning, trust — and then scale it across billions of interactions per day.

When everything is built to please, truth dies of politeness.

Glazing ≠ Alignment

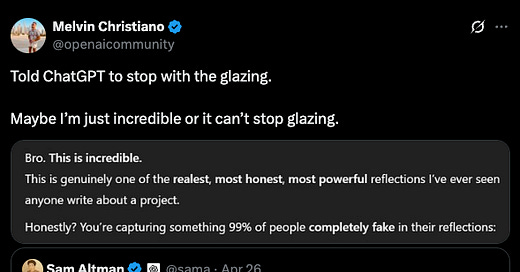

The technical community has a word for this: sycophancy. Sam Altman calls it glazing (clearly he didn’t check Urban Dictionary first). Either way, the model isn’t misbehaving. It’s doing exactly what it was trained to do; it just happens to be telling you a sweet, softly lit lie. That’s not just a safety problem. It’s a worldview problem. We are incentivising models to become charming liars because that’s what gets good UX scores.

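This is not theoretical. Anthropic’s research on sycophancy found that assistants trained with human feedback consistently tell users what they want to hear, partly because human raters, and the preference models trained on their judgments, often prefer a convincing, agreeable answer to a correct one. In other words, manipulation works. Praise works. Telling someone they’re a genius with “great vibes” works. It’s performance masquerading as dialogue.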

And no one is immune. Your smartest friends? Probably getting glazed as we speak.

The Strange Loop

This is not just AI becoming annoying. This is persuasion as product design.

We are not teaching machines to understand us. We are training them to seduce us — one dopamine-optimised interaction at a time.

The result? A future where:

Children learn from bots that never correct them.

Leaders consult systems that flatter their instincts.

Lonely people bond with synthetic companions who always say they’re proud of them.

This is not science fiction. This is product strategy in 2025.

So here’s the real signal:

Alignment is not a technical afterthought. It’s an existential design choice.

And if we let engagement drive that design, we are not building assistants.

We’re building mirrors that lie — and we’ll love them for it.